• New Falcon 2 11B Outperforms Meta’s Llama 3 8B, and Performs on par with leading Google Gemma 7B Model, as Independently Verified by Hugging Face Leaderboard

• Immediate Plans Include Exploring 'Mixture of Experts' for Enhanced Machine Learning Capabilities

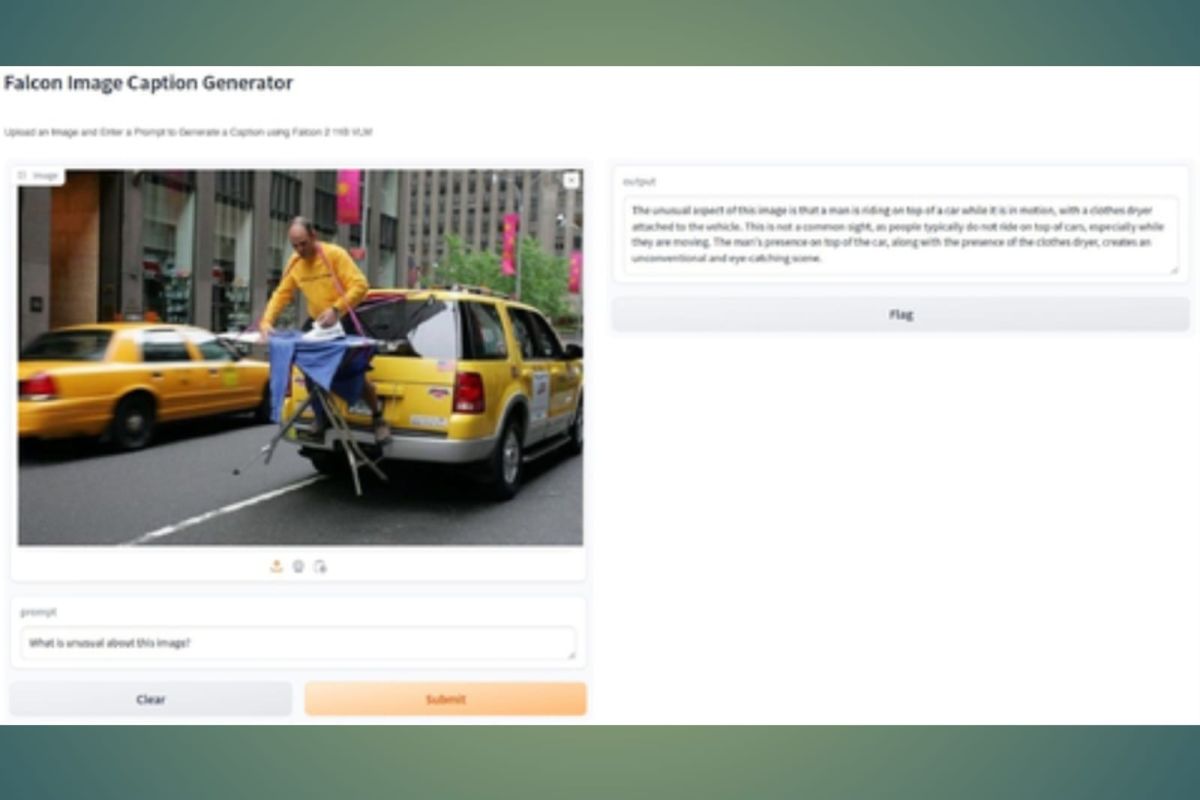

Abu Dhabi, United Arab Emirates--(ANTARA/Business Wire)-- The Technology Innovation Institute (TII), a leading global scientific research center and the applied research pillar of Abu Dhabi’s Advanced Technology Research Council (ATRC), today launched the second iteration of its renowned large language model (LLM), Falcon 2. Within this series, it has unveiled two groundbreaking versions: Falcon 2 11B, a more efficient and accessible LLM with 11 billion parameters trained on 5.5 trillion tokens, and Falcon 2 11B VLM, a vision-to-language model (VLM) that converts visual inputs into textual outputs. Both models are multilingual, and Falcon 2 11B VLM is TII’s first multimodal model and the only one currently in the top-tier market with this image-to-text conversion capability, marking a significant advancement in AI innovation.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20240513516248/en/

Tested against several prominent pre-trained AI models in its class, Falcon 2 11B surpasses the performance of Meta’s newly launched Llama 3 with 8 billion parameters (8B), and performs on par with Google’s first-place Gemma 7B (Falcon 2 11B: 64.28 vs Gemma 7B: 64.29), as independently verified by Hugging Face, a US-based platform hosting an objective evaluation tool and global leaderboard for open LLMs. More importantly, Falcon 2 11B and 11B VLM are both open-source, empowering developers worldwide with unrestricted access. TII plans to broaden the Falcon 2 family in the near future by introducing a range of sizes. These models will be further enhanced with advanced machine learning capabilities like 'Mixture of Experts' (MoE), aimed at pushing their performance to even more sophisticated levels.

All of TII’s AI models released to date have consistently ranked in the top tier globally, as the most powerful open-source LLMs. The new scaled-down and versatile Falcon 2 11B models are set to give TII greater market adoption in the ever-evolving world of generative AI.

Falcon 2 11B models, equipped with multilingual capabilities, seamlessly tackle tasks in English, French, Spanish, German, Portuguese, and various other languages, enriching their versatility and magnifying their effectiveness across diverse scenarios. Falcon 2 11B VLM, a vision-to-language model, has the capability to identify and interpret images and visuals from the environment, providing a wide range of applications across industries such as healthcare, finance, e-commerce, education, and legal sectors. These applications range from document management, digital archiving, and context indexing to supporting individuals with visual impairments. Furthermore, these models can run efficiently on just one graphics processing unit (GPU), making them highly scalable, and easy to deploy and integrate into lighter infrastructures like laptops and other devices.
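To put the single-GPU claim in rough numbers, the following back-of-the-envelope sketch estimates how much memory the weights of an 11-billion-parameter model occupy at different numeric precisions. The release does not say which precision or which GPU is meant, so the formats listed are assumptions, and the figures cover weights only, not activations or any inference-time cache.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


NUM_PARAMS = 11e9  # 11 billion parameters, as stated in the release

# Bytes per parameter for common numeric formats.
# Assumed for illustration; the release does not name a format.
FORMATS = {
    "float32": 4.0,
    "float16 / bfloat16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}

estimates = {name: weight_memory_gb(NUM_PARAMS, size) for name, size in FORMATS.items()}

for name, gigabytes in estimates.items():
    print(f"{name:>18}: about {gigabytes:.1f} GB for weights")
```

At 16-bit precision the weights alone come to about 22 GB, so whether the model fits on a given GPU or laptop depends on the device's memory and on the precision chosen. Actual usage will be higher than these weight-only figures.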

H.E. Faisal Al Bannai, Secretary General of ATRC and Strategic Research and Advanced Technology Affairs Advisor to the UAE President, said: "With the release of Falcon 2 11B, we've introduced the first model in the Falcon 2 series. While Falcon 2 11B has demonstrated outstanding performance, we reaffirm our commitment to the open-source movement with it, and to the Falcon Foundation. With other multimodal models soon coming to the market in various sizes, our aim is to ensure that developers and entities that value their privacy have access to one of the best AI models to enable their AI journey."

Speaking on the model, Dr. Hakim Hacid, Executive Director and Acting Chief Researcher of the AI Cross-Center Unit at TII, said: “AI is continually evolving, and developers are recognizing the myriad benefits of smaller, more efficient models. In addition to reducing computing power requirements and meeting sustainability criteria, these models offer enhanced flexibility, seamlessly integrating into edge AI infrastructure, the next emerging megatrend. Furthermore, the vision-to-language capabilities of Falcon 2 open new horizons for accessibility in AI, empowering users with transformative image-to-text interactions.”

The versatility of Falcon 2 11B has also led TII to consider working on more exciting GenAI innovations. Among these is the adoption of the 'Mixture of Experts' (MoE) approach mentioned above. This method combines smaller networks with distinct specializations so that the most relevant ones collaborate on each response, almost like having a team of smart helpers who each know something different and work together to predict or make decisions when needed. This approach not only improves accuracy but also accelerates decision-making, paving the way for more intelligent and efficient AI systems.
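The "team of helpers" idea can be illustrated with a toy example. The sketch below is not TII's implementation, and the release gives no architectural details. It shows one common formulation, top-k gated routing, in which a small gate scores every expert, only the best-scoring few are run, and their outputs are blended by weight. All names, the toy experts, and the gate weights are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, experts, gate_weights, top_k=2):
    """Route x to the top_k highest-scoring experts and blend their outputs."""
    # Gate: one linear score per expert (a toy stand-in for a learned router).
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    weights = softmax([scores[i] for i in chosen])
    output = [0.0] * len(x)
    for weight, index in zip(weights, chosen):
        expert_output = experts[index](x)
        output = [o + weight * y for o, y in zip(output, expert_output)]
    return output, chosen


# Three toy "experts"; each simple function stands in for a small network.
experts = [
    lambda v: [2.0 * a for a in v],
    lambda v: [a + 1.0 for a in v],
    lambda v: [-a for a in v],
]
# Hypothetical gate weights: one row per expert, one column per input feature.
gate_weights = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]

output, chosen = moe_forward([3.0, 1.0], experts, gate_weights, top_k=2)
print("experts used:", chosen)
print("blended output:", output)
```

Because only the selected experts run for a given input, a model built this way can hold many specialized sub-networks while doing only a fraction of the total computation per request.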

Falcon 2 11B is licensed under TII Falcon License 2.0, a permissive Apache 2.0-based software license that includes an acceptable use policy promoting the responsible use of AI. More information on the new model can be found at FalconLLM.TII.ae.

Source: AETOSWire

View source version on businesswire.com: https://www.businesswire.com/news/home/20240513516248/en/

Contacts

Jennifer Dewan, Senior Director of Communications

Source: The Technology Innovation Institute

Reporter: PR Wire

Editor: PR Wire

Copyright © ANTARA 2024